
Decoding Methods

In coding theory, decoding is the process of translating received messages into codewords of a given code. There are many common methods of mapping messages to codewords. These are often used to recover messages sent over a noisy channel, such as a binary symmetric channel. Notation: $C \subset \mathbb{F}_2^n$ is a binary code of length $n$; $x, y$ are elements of $\mathbb{F}_2^n$; and $d(x,y)$ is the distance between those elements. Ideal observer decoding: given a received message $x \in \mathbb{F}_2^n$, ideal observer decoding generates the codeword $y \in C$ that maximizes $\mathbb{P}(y \text{ sent} \mid x \text{ received})$. For example, a person can choose the codeword $y$ that is most likely to be received as the message $x$ after transmission. Decoding conventions: the most likely codeword need not be unique, since more than one codeword may have an equal likelihood of mutating into the received message. In such a case, the sender and receiver(s) must a ...
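
As a minimal sketch of ideal observer decoding, the following Python assumes a binary symmetric channel with crossover probability `p` and, unless a `prior` is supplied, equally likely codewords. The function names, the tuple representation of words, and the `prior` parameter are illustrative assumptions, not part of the excerpt. All maximizing codewords are returned so that ties remain visible.

```python
import math


def hamming_distance(x, y):
    """Number of positions in which the words x and y differ."""
    return sum(a != b for a, b in zip(x, y))


def likelihood(x, y, p):
    """P(x received | y sent) on a binary symmetric channel (assumed model)."""
    d = hamming_distance(x, y)
    return (p ** d) * ((1 - p) ** (len(x) - d))


def ideal_observer_decode(x, code, p, prior=None):
    """Return every codeword y in `code` maximizing P(y sent | x received).

    By Bayes' rule the posterior is proportional to
    P(x received | y sent) * P(y sent); the common normalizing factor
    P(x received) does not affect which codeword is the maximizer.
    """
    if prior is None:
        # Assumption: all codewords are equally likely to be sent.
        prior = {y: 1 / len(code) for y in code}
    scores = {y: likelihood(x, y, p) * prior[y] for y in code}
    best = max(scores.values())
    return [y for y in code if math.isclose(scores[y], best, rel_tol=1e-12)]


# Length-3 repetition code: one flipped bit is decoded back to (0, 0, 0).
repetition = [(0, 0, 0), (1, 1, 1)]
print(ideal_observer_decode((0, 1, 0), repetition, p=0.1))

# A tie: both codewords are at distance 1 from the received word.
print(ideal_observer_decode((0, 1), [(0, 0), (1, 1)], p=0.1))
```

The second call returns two codewords, which is the situation in which a decoding convention is needed.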

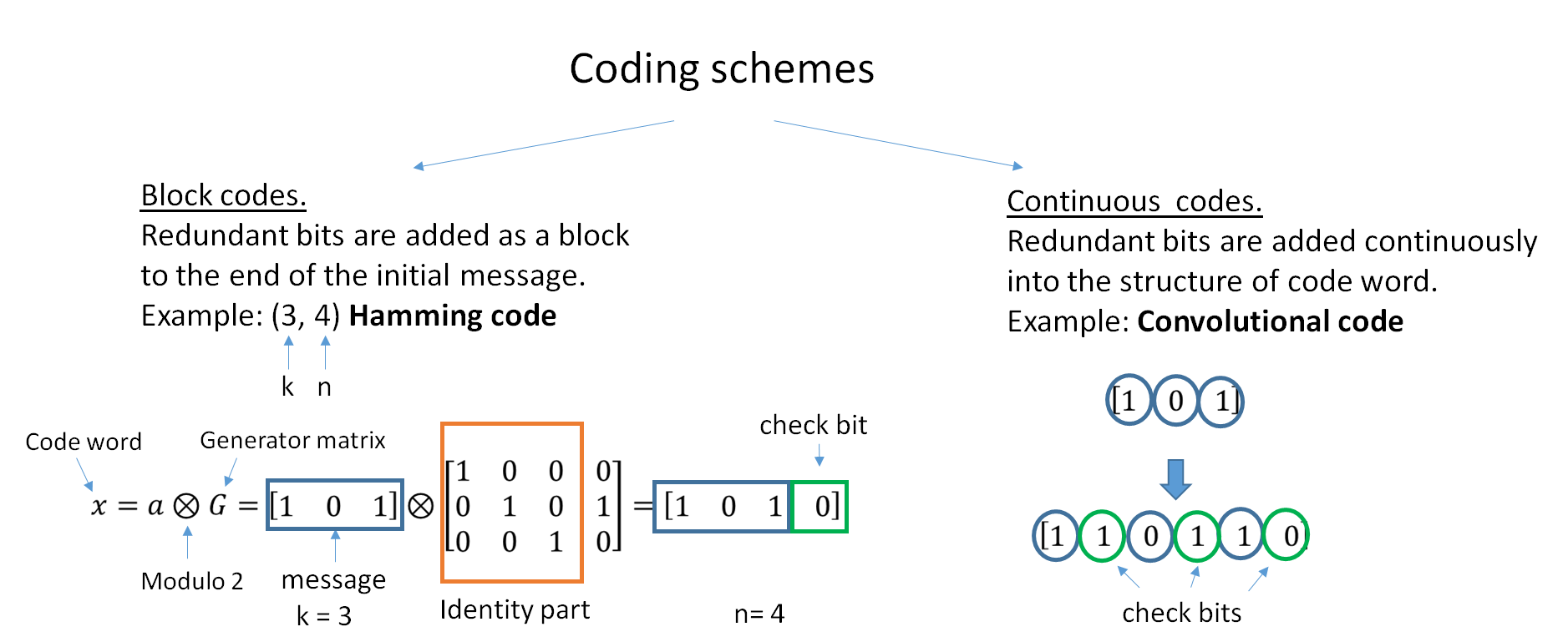

Coding Theory

Coding theory is the study of the properties of codes and their respective fitness for specific applications. Codes are used for data compression, cryptography, error detection and correction, data transmission and data storage. Codes are studied by various scientific disciplines (such as information theory, electrical engineering, mathematics, linguistics, and computer science) for the purpose of designing efficient and reliable data transmission methods. This typically involves the removal of redundancy and the correction or detection of errors in the transmitted data. There are four types of coding: (1) data compression (or *source coding*), (2) error control (or *channel coding*), (3) cryptographic coding, and (4) line coding. Data compression attempts to remove unwanted redundancy from the data from a source in order to transmit it more efficiently. For example, ZIP data compression makes data files smaller, for purposes such as reducing Internet traffic. Data compression and er ...

Syndrome Decoding

In coding theory, decoding is the process of translating received messages into codewords of a given code. There are many common methods of mapping messages to codewords. These are often used to recover messages sent over a noisy channel, such as a binary symmetric channel. Notation: $C \subset \mathbb{F}_2^n$ is a binary code of length $n$; $x, y$ are elements of $\mathbb{F}_2^n$; and $d(x,y)$ is the distance between those elements. Ideal observer decoding: given a received message $x \in \mathbb{F}_2^n$, ideal observer decoding generates the codeword $y \in C$ that maximizes $\mathbb{P}(y \text{ sent} \mid x \text{ received})$. For example, a person can choose the codeword $y$ that is most likely to be received as the message $x$ after transmission. Decoding conventions: the most likely codeword need not be unique, since more than one codeword may have an equal likelihood of mutating into the received message. In such a case, the sender and receiver(s) must ...

Cambridge University Press

Cambridge University Press is the university press of the University of Cambridge. Granted letters patent by King Henry VIII in 1534, it is the oldest university press in the world. It is also the King's Printer. Cambridge University Press is a department of the University of Cambridge and is both an academic and educational publisher. It became part of Cambridge University Press & Assessment, following a merger with Cambridge Assessment in 2021. With a global sales presence, publishing hubs, and offices in more than 40 countries, it publishes over 50,000 titles by authors from over 100 countries. Its publishing includes more than 380 academic journals, monographs, reference works, school and university textbooks, and English language teaching and learning publications. It also publishes Bibles, runs a bookshop in Cambridge, sells through Amazon, and has a conference venues business in Cambridge at the Pitt Building and the Sir Geoffrey Cass Sports and Social Centre. ...

IEEE Transactions On Information Theory

*IEEE Transactions on Information Theory* is a monthly peer-reviewed scientific journal published by the IEEE Information Theory Society. It covers information theory and the mathematics of communications. It was established in 1953 as *IRE Transactions on Information Theory*. The editor-in-chief is Muriel Médard (Massachusetts Institute of Technology). As of 2007, the journal allows the posting of preprints on arXiv. According to Jack van Lint, it is the leading research journal in the whole field of coding theory. A 2006 study using the PageRank network analysis algorithm found that, among hundreds of computer science-related journals, *IEEE Transactions on Information Theory* had the highest ranking and was thus deemed the most prestigious. *ACM Computing Surveys*, with the highest impact factor ...

Forbidden Input

In digital logic, a don't-care term (abbreviated DC, historically also known as *redundancies*, *irrelevancies*, *optional entries*, *invalid combinations*, *vacuous combinations*, *forbidden combinations*, *unused states* or *logical remainders*) for a function is an input-sequence (a series of bits) for which the function output does not matter. An input that is known never to occur is a can't-happen term. Both these types of conditions are treated the same way in logic design and may be referred to collectively as *don't-care conditions* for brevity. The designer of a logic circuit to implement the function need not care about such inputs, but can choose the circuit's output arbitrarily, usually such that the simplest circuit results (minimization). Don't-care terms are important to consider in minimizing logic circuit design, including graphical methods like Karnaugh–Veitch maps and algebraic methods such as the Quine–McCluskey algorithm. In 1958, S ...
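
To illustrate how don't-care terms permit a simpler circuit, here is a small sketch built on a hypothetical specification (not taken from the excerpt): a 4-bit binary-coded decimal input whose output is 1 for digits 5 to 9, with inputs 10 to 15 treated as don't-care. The function names and the bit labels `a` (most significant) to `d` are assumptions made for this example.

```python
def spec(n):
    """Hypothetical spec: 1 for BCD digits 5-9, 0 for 0-4, None = don't care."""
    if n > 9:
        return None
    return 1 if n >= 5 else 0


def bits(n):
    """Split a 4-bit input into (a, b, c, d), with a the most significant bit."""
    return (n >> 3) & 1, (n >> 2) & 1, (n >> 1) & 1, n & 1


def minimized(n):
    """Form that exploits the don't-cares: a OR (b AND (c OR d))."""
    a, b, c, d = bits(n)
    return a | (b & (c | d))


def without_dont_cares(n):
    """Form that forces inputs 10-15 to 0: a'bd + a'bc + ab'c'."""
    a, b, c, d = bits(n)
    return ((1 - a) & b & d) | ((1 - a) & b & c) | (a & (1 - b) & (1 - c))


def agrees_on_care_set(f, spec_fn, n_inputs=16):
    """True if f matches the spec wherever the spec actually cares."""
    return all(spec_fn(n) is None or f(n) == spec_fn(n) for n in range(n_inputs))


print(agrees_on_care_set(minimized, spec))
print(agrees_on_care_set(without_dont_cares, spec))
```

Both forms satisfy the specification on digits 0 to 9; they differ only on the inputs 10 to 15, where the shorter form is free to output 1.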

Error Detection And Correction

In information theory and coding theory with applications in computer science and telecommunication, error detection and correction (EDAC) or error control are techniques that enable reliable delivery of digital data over unreliable communication channels. Many communication channels are subject to channel noise, and thus errors may be introduced during transmission from the source to a receiver. Error detection techniques allow detecting such errors, while error correction enables reconstruction of the original data in many cases. Definitions: *Error detection* is the detection of errors caused by noise or other impairments during transmission from the transmitter to the receiver. *Error correction* is the detection of errors and reconstruction of the original, error-free data. History: In classical antiquity, copyists of the Hebrew Bible were paid for their work according to the number of stichs (lines of verse). As the prose books of the Bible were hardly ever ...

Don't Care Alarm

In digital logic, a don't-care term (abbreviated DC, historically also known as *redundancies*, *irrelevancies*, *optional entries*, *invalid combinations*, *vacuous combinations*, *forbidden combinations*, *unused states* or *logical remainders*) for a function is an input-sequence (a series of bits) for which the function output does not matter. An input that is known never to occur is a can't-happen term. Both these types of conditions are treated the same way in logic design and may be referred to collectively as *don't-care conditions* for brevity. The designer of a logic circuit to implement the function need not care about such inputs, but can choose the circuit's output arbitrarily, usually such that the simplest circuit results (minimization). Don't-care terms are important to consider in minimizing logic circuit design, including graphical methods like Karnaugh–Veitch maps and algebraic methods such as the Quine–McCluskey algorithm. In 1958, ...

Euclidean Distance

In mathematics, the Euclidean distance between two points in Euclidean space is the length of a line segment between the two points. It can be calculated from the Cartesian coordinates of the points using the Pythagorean theorem, therefore occasionally being called the Pythagorean distance. These names come from the ancient Greek mathematicians Euclid and Pythagoras, although Euclid did not represent distances as numbers, and the connection from the Pythagorean theorem to distance calculation was not made until the 18th century. The distance between two objects that are not points is usually defined to be the smallest distance among pairs of points from the two objects. Formulas are known for computing distances between different types of objects, such as the distance from a point to a line. In advanced mathematics, the concept of distance has been generalized to abstract metric spaces, and other distances than Euclidean have been studied. In some applications in statistic ...
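
The calculation from Cartesian coordinates can be sketched in a few lines of Python. The function name `euclidean_distance` is an illustrative choice; the standard library's `math.dist` (Python 3.8 and later) computes the same quantity and is used here only as a cross-check.

```python
import math


def euclidean_distance(p, q):
    """Length of the segment between points p and q (sequences of coordinates).

    Applies the Pythagorean theorem: the square root of the sum of the
    squared coordinate differences.
    """
    if len(p) != len(q):
        raise ValueError("points must have the same number of coordinates")
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))


# A 3-4-5 right triangle: the distance from the origin to (3, 4) is 5.
print(euclidean_distance((0, 0), (3, 4)))

# Cross-check against the standard library in three dimensions.
print(math.isclose(euclidean_distance((1, 2, 3), (4, 6, 8)),
                   math.dist((1, 2, 3), (4, 6, 8))))
```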

Forward Error Correction

In computing, telecommunication, information theory, and coding theory, an error correction code (ECC), sometimes called an error correcting code, is used for controlling errors in data over unreliable or noisy communication channels. The central idea is that the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a ...
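
As a sketch of how redundancy lets the receiver correct an error without retransmission, the following Python encodes 4 data bits with the Hamming (7,4) code and corrects any single flipped bit. The bit layout (parity bits at positions 1, 2 and 4) is one common convention and is an assumption here, as are the function names.

```python
def hamming74_encode(data):
    """Encode 4 data bits as the 7-bit word [p1, p2, d1, p3, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # even parity over positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # even parity over positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # even parity over positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming74_correct(received):
    """Return (corrected word, error position); position 0 means no error seen.

    The three parity checks form a syndrome whose value, read as a binary
    number, is the 1-based position of a single flipped bit.
    """
    r = list(received)
    s1 = r[0] ^ r[2] ^ r[4] ^ r[6]
    s2 = r[1] ^ r[2] ^ r[5] ^ r[6]
    s3 = r[3] ^ r[4] ^ r[5] ^ r[6]
    position = s1 + 2 * s2 + 4 * s3
    if position:
        r[position - 1] ^= 1  # flip the bit the syndrome points at
    return r, position


def hamming74_data(codeword):
    """Extract the 4 data bits from a 7-bit codeword."""
    return [codeword[2], codeword[4], codeword[5], codeword[6]]


sent = hamming74_encode([1, 0, 1, 1])
noisy = list(sent)
noisy[4] ^= 1  # the channel flips the bit at position 5
fixed, position = hamming74_correct(noisy)
print(position, hamming74_data(fixed))
```

The sketch only handles single-bit errors; two flipped bits would produce a nonzero syndrome pointing at the wrong position.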

PRML

In computer data storage, partial-response maximum-likelihood (PRML) is a method for recovering the digital data from the weak analog read-back signal picked up by the head of a magnetic disk drive or tape drive. PRML was introduced to recover data more reliably or at a greater areal-density than earlier simpler schemes such as peak-detection. These advances are important because most of the digital data in the world is stored using magnetic storage on hard disk or tape drives. Ampex introduced PRML in a tape drive in 1984. IBM introduced PRML in a disk drive in 1990 and also coined the acronym PRML. Many advances have taken place since the initial introduction. Recent read/write channels operate at much higher data-rates, are fully adaptive, and, in particular, include the ability to handle nonlinear signal distortion and non-stationary, colored, data-dependent noise (PDNP or NPML). *Partial response* refers to the fact that part of the response to an individual b ...

Anne Canteaut

Anne Canteaut is a French researcher in cryptography, working at the French Institute for Research in Computer Science and Automation (INRIA) in Paris. She studies the design and cryptanalysis of symmetric-key algorithms and S-boxes. Education and career: Canteaut earned a diploma in engineering from ENSTA Paris in 1993. She completed her doctorate at Pierre and Marie Curie University in 1996, with the dissertation *Attaques de cryptosystèmes à mots de poids faible et construction de fonctions t-résilientes* supervised by . She is currently the chair of the INRIA Evaluation Committee, and of the FSE steering committee. She was the scientific leader of the INRIA team SECRET between 2007 and 2019. Cryptographic primitives: Canteaut has contributed to the design of several new cryptographic primitives:
* DECIM, a stream cipher submitted to the eSTREAM project
* SOSEMANUK, a stream cipher selected in the eSTREAM portfolio
* Shabal, a hash function submitted to the SHA-3 co ...

Las Vegas Algorithm

In computing, a Las Vegas algorithm is a randomized algorithm that always gives correct results; that is, it always produces the correct result or it informs about the failure. However, the runtime of a Las Vegas algorithm differs depending on the input. The usual definition of a Las Vegas algorithm includes the restriction that the *expected* runtime be finite, where the expectation is carried out over the space of random information, or entropy, used in the algorithm. An alternative definition requires that a Las Vegas algorithm always terminates (is effective), but may output a symbol not part of the solution space to indicate failure in finding a solution. The nature of Las Vegas algorithms makes them suitable in situations where the number of possible solutions is limited, and where verifying the correctness of a candidate solution is relatively easy while finding a solution is complex. Las Vegas algorithms are prominent in the field of artificial intelligence, and in other ...
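
Both definitions can be illustrated with a toy search problem, sketched below under stated assumptions: the task (finding the index of a target value by random probing), the function name, and the `max_tries` and `rng` parameters are all hypothetical choices for this example. Without `max_tries` the function never returns a wrong answer but its runtime is random; with `max_tries` it always terminates and returns `None` to signal failure.

```python
import random


def las_vegas_find(items, target, max_tries=None, rng=None):
    """Probe random positions until one holds `target`.

    Any index returned is verified before it is returned, so the answer is
    never wrong. If `max_tries` is None the loop runs until it succeeds
    (the caller must ensure `target` occurs in `items`); otherwise None is
    returned after `max_tries` unsuccessful probes.
    """
    rng = rng or random.Random()
    tries = 0
    while max_tries is None or tries < max_tries:
        index = rng.randrange(len(items))
        tries += 1
        if items[index] == target:  # cheap verification of the candidate
            return index
    return None  # failure symbol, outside the solution space


# Half of the entries are 1, so the expected number of probes is 2.
items = [0, 1] * 50
index = las_vegas_find(items, 1)
print(items[index] == 1)

# Bounded variant on an input with no solution: reports failure.
print(las_vegas_find([0] * 10, 1, max_tries=20))
```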