|

Parallel Computing

Parallel computing is a type of computing, computation in which many calculations or Process (computing), processes are carried out simultaneously. Large problems can often be divided into smaller ones, which can then be solved at the same time. There are several different forms of parallel computing: Bit-level parallelism, bit-level, Instruction-level parallelism, instruction-level, Data parallelism, data, and task parallelism. Parallelism has long been employed in high-performance computing, but has gained broader interest due to the physical constraints preventing frequency scaling.S.V. Adve ''et al.'' (November 2008)"Parallel Computing Research at Illinois: The UPCRC Agenda" (PDF). Parallel@Illinois, University of Illinois at Urbana-Champaign. "The main techniques for these performance benefits—increased clock frequency and smarter but increasingly complex architectures—are now hitting the so-called power wall. The computer industry has accepted that future performance inc ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Synchronization (computer Science)

In computer science, synchronization is the task of coordinating multiple processes to join up or handshake at a certain point, in order to reach an agreement or commit to a certain sequence of action. Motivation The need for synchronization does not arise merely in multi-processor systems but for any kind of concurrent processes; even in single processor systems. Mentioned below are some of the main needs for synchronization: '' Forks and Joins:'' When a job arrives at a fork point, it is split into N sub-jobs which are then serviced by n tasks. After being serviced, each sub-job waits until all other sub-jobs are done processing. Then, they are joined again and leave the system. Thus, parallel programming requires synchronization as all the parallel processes wait for several other processes to occur. '' Producer-Consumer:'' In a producer-consumer relationship, the consumer process is dependent on the producer process until the necessary data has been produced. ''Exclusiv ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

IBM Blue Gene P Supercomputer

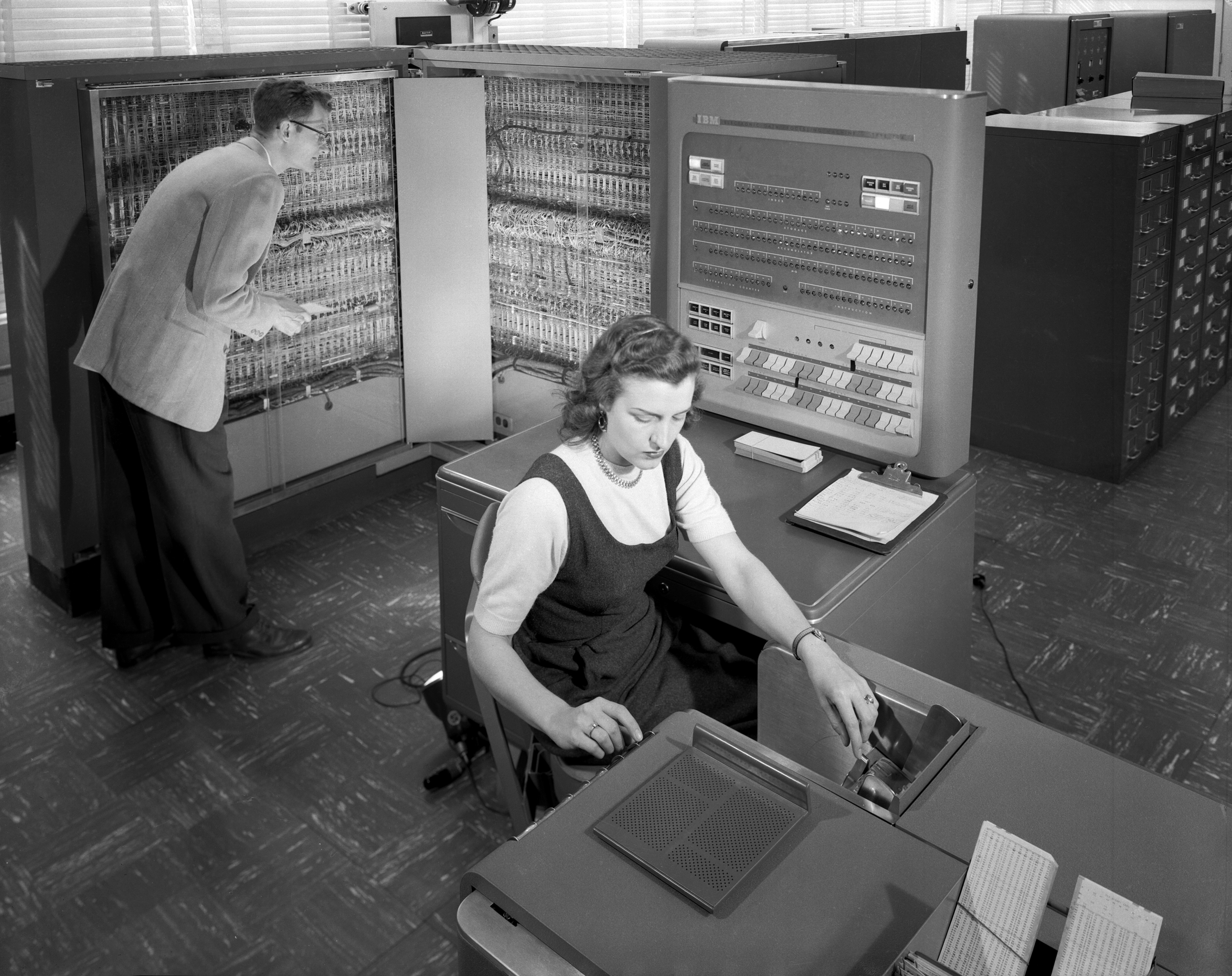

International Business Machines Corporation (using the trademark IBM), nicknamed Big Blue, is an American Multinational corporation, multinational technology company headquartered in Armonk, New York, and present in over 175 countries. It is a publicly traded company and one of the 30 companies in the Dow Jones Industrial Average. IBM is the largest industrial research organization in the world, with 19 research facilities across a dozen countries; for 29 consecutive years, from 1993 to 2021, it held the record for most annual U.S. patents generated by a business. IBM was founded in 1911 as the Computing-Tabulating-Recording Company (CTR), a holding company of manufacturers of record-keeping and measuring systems. It was renamed "International Business Machines" in 1924 and soon became the leading manufacturer of Tabulating machine, punch-card tabulating systems. During the 1960s and 1970s, the IBM mainframe, exemplified by the IBM System/360, System/360 and its successors, wa ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Multi-core Processor

A multi-core processor (MCP) is a microprocessor on a single integrated circuit (IC) with two or more separate central processing units (CPUs), called ''cores'' to emphasize their multiplicity (for example, ''dual-core'' or ''quad-core''). Each core reads and executes Instruction set, program instructions, specifically ordinary Instruction set, CPU instructions (such as add, move data, and branch). However, the MCP can run instructions on separate cores at the same time, increasing overall speed for programs that support Multithreading (computer architecture), multithreading or other parallel computing techniques. Manufacturers typically integrate the cores onto a single IC Die (integrated circuit), die, known as a ''chip multiprocessor'' (CMP), or onto multiple dies in a single Chip carrier, chip package. As of 2024, the microprocessors used in almost all new personal computers are multi-core. A multi-core processor implements multiprocessing in a single physical package. Des ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Upper Bound

In mathematics, particularly in order theory, an upper bound or majorant of a subset of some preordered set is an element of that is every element of . Dually, a lower bound or minorant of is defined to be an element of that is less than or equal to every element of . A set with an upper (respectively, lower) bound is said to be bounded from above or majorized (respectively bounded from below or minorized) by that bound. The terms bounded above (bounded below) are also used in the mathematical literature for sets that have upper (respectively lower) bounds. Examples For example, is a lower bound for the set (as a subset of the integers or of the real numbers, etc.), and so is . On the other hand, is not a lower bound for since it is not smaller than every element in . and other numbers ''x'' such that would be an upper bound for ''S''. The set has as both an upper bound and a lower bound; all other numbers are either an upper bound or a lower bound for ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Computer Networking

A computer network is a collection of communicating computers and other devices, such as printers and smart phones. In order to communicate, the computers and devices must be connected by wired media like copper cables, optical fibers, or by wireless communication. The devices may be connected in a variety of network topologies. In order to communicate over the network, computers use agreed-on rules, called communication protocols, over whatever medium is used. The computer network can include personal computers, Server (computing), servers, networking hardware, or other specialized or general-purpose Host (network), hosts. They are identified by network addresses and may have hostnames. Hostnames serve as memorable labels for the nodes and are rarely changed after initial assignment. Network addresses serve for locating and identifying the nodes by communication protocols such as the Internet Protocol. Computer networks may be classified by many criteria, including the tr ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Race Condition

A race condition or race hazard is the condition of an electronics, software, or other system where the system's substantive behavior is dependent on the sequence or timing of other uncontrollable events, leading to unexpected or inconsistent results. It becomes a bug when one or more of the possible behaviors is undesirable. The term ''race condition'' was already in use by 1954, for example in David A. Huffman's doctoral thesis "The synthesis of sequential switching circuits". Race conditions can occur especially in logic circuits or multithreaded or distributed software programs. Using mutual exclusion can prevent race conditions in distributed software systems. In electronics A typical example of a race condition may occur when a logic gate combines signals that have traveled along different paths from the same source. The inputs to the gate can change at slightly different times in response to a change in the source signal. The output may, for a brief period, chan ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Software Bug

A software bug is a design defect ( bug) in computer software. A computer program with many or serious bugs may be described as ''buggy''. The effects of a software bug range from minor (such as a misspelled word in the user interface) to severe (such as frequent crashing). In 2002, a study commissioned by the US Department of Commerce's National Institute of Standards and Technology concluded that "software bugs, or errors, are so prevalent and so detrimental that they cost the US economy an estimated $59 billion annually, or about 0.6 percent of the gross domestic product". Since the 1950s, some computer systems have been designed to detect or auto-correct various software errors during operations. History Terminology ''Mistake metamorphism'' (from Greek ''meta'' = "change", ''morph'' = "form") refers to the evolution of a defect in the final stage of software deployment. Transformation of a ''mistake'' committed by an analyst in the early stages of the softw ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

Sequential Algorithm

In computer science, a sequential algorithm or serial algorithm is an algorithm that is executed sequentially – once through, from start to finish, without other processing executing – as opposed to concurrently or in parallel. The term is primarily used to contrast with '' concurrent algorithm'' or '' parallel algorithm;'' most standard computer algorithms are sequential algorithms, and not specifically identified as such, as sequentialness is a background assumption. Concurrency and parallelism are in general distinct concepts, but they often overlap – many distributed algorithms are both concurrent and parallel – and thus "sequential" is used to contrast with both, without distinguishing which one. If these need to be distinguished, the opposing pairs sequential/concurrent and serial/parallel may be used. "Sequential algorithm" may also refer specifically to an algorithm for decoding a convolutional code. See also * Online algorithm * Streaming algorithm In computer ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Parallel Algorithm

In computer science, a parallel algorithm, as opposed to a traditional serial algorithm, is an algorithm which can do multiple operations in a given time. It has been a tradition of computer science to describe serial algorithms in abstract machine models, often the one known as random-access machine. Similarly, many computer science researchers have used a so-called parallel random-access machine (PRAM) as a parallel abstract machine (shared-memory). Many parallel algorithms are executed concurrently – though in general concurrent algorithms are a distinct concept – and thus these concepts are often conflated, with which aspect of an algorithm is parallel and which is concurrent not being clearly distinguished. Further, non-parallel, non-concurrent algorithms are often referred to as " sequential algorithms", by contrast with concurrent algorithms. Parallelizability Algorithms vary significantly in how parallelizable they are, ranging from easily parallelizable to compl ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Grid Computing

Grid computing is the use of widely distributed computer resources to reach a common goal. A computing grid can be thought of as a distributed system with non-interactive workloads that involve many files. Grid computing is distinguished from conventional high-performance computing systems such as cluster computing in that grid computers have each node set to perform a different task/application. Grid computers also tend to be more heterogeneous and geographically dispersed (thus not physically coupled) than cluster computers. Although a single grid can be dedicated to a particular application, commonly a grid is used for a variety of purposes. Grids are often constructed with general-purpose grid middleware software libraries. Grid sizes can be quite large. Grids are a form of distributed computing composed of many networked loosely coupled computers acting together to perform large tasks. For certain applications, distributed or grid computing can be seen as a special ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Massively Parallel (computing)

Massively may refer to: *Mass Mass is an Intrinsic and extrinsic properties, intrinsic property of a physical body, body. It was traditionally believed to be related to the physical quantity, quantity of matter in a body, until the discovery of the atom and particle physi ... * Massively (blog), a blog about MMOs {{disambiguation ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |

|

|

Computer Cluster

A computer cluster is a set of computers that work together so that they can be viewed as a single system. Unlike grid computers, computer clusters have each node set to perform the same task, controlled and scheduled by software. The newest manifestation of cluster computing is cloud computing. The components of a cluster are usually connected to each other through fast local area networks, with each node (computer used as a server) running its own instance of an operating system. In most circumstances, all of the nodes use the same hardware and the same operating system, although in some setups (e.g. using Open Source Cluster Application Resources (OSCAR)), different operating systems can be used on each computer, or different hardware. Clusters are usually deployed to improve performance and availability over that of a single computer, while typically being much more cost-effective than single computers of comparable speed or availability. Computer clusters emerged as ... [...More Info...] [...Related Items...] OR: [Wikipedia] [Google] [Baidu] |