
Existential Risk From Artificial General Intelligence

Existential risk from artificial intelligence refers to the idea that substantial progress in artificial general intelligence (AGI) could lead to human extinction or an irreversible global catastrophe. One argument for the importance of this risk references how human beings dominate other species because the human brain possesses distinctive capabilities other animals lack. If AI were to surpass human intelligence and become superintelligent, it might become uncontrollable. Just as the fate of the mountain gorilla depends on human goodwill, the fate of humanity could depend on the actions of a future machine superintelligence. The plausibility of existential catastrophe due to AI is widely debated. It hinges in part on whether AGI or superintelligence are achievable, the speed at which dangerous capabilities and behaviors emerge, and whether practical scenarios for AI takeovers exist. Concerns about superintelligence have been voiced by computer scientists and tech CEOs such ...

Artificial General Intelligence

Artificial general intelligence (AGI), sometimes called human-level intelligence AI, is a type of artificial intelligence that would match or surpass human capabilities across virtually all cognitive tasks. Some researchers argue that state-of-the-art large language models already exhibit early signs of AGI-level capability, while others maintain that genuine AGI has not yet been achieved. AGI is conceptually distinct from artificial superintelligence (ASI), which would outperform the best human abilities across every domain by a wide margin. AGI is considered one of the definitions of strong AI. Unlike artificial narrow intelligence (ANI), whose competence is confined to well-defined tasks, an AGI system can generalise knowledge, transfer skills between domains, and solve novel problems without task-specific reprogramming. The concept does not, in principle, require the system to be an autonomous agent; a static model ...

The Guardian

''The Guardian'' is a British daily newspaper. It was founded in Manchester in 1821 as ''The Manchester Guardian'' and changed its name in 1959, followed by a move to London. Along with its sister paper, ''The Guardian Weekly'', ''The Guardian'' is part of the Guardian Media Group, owned by the Scott Trust Limited. The trust was created in 1936 to "secure the financial and editorial independence of ''The Guardian'' in perpetuity and to safeguard the journalistic freedom and liberal values of ''The Guardian'' free from commercial or political interference". The trust was converted into a limited company in 2008, with a constitution written so as to maintain for ''The Guardian'' the same protections as were built into the structure of the Scott Trust by its creators. Profits are reinvested in its journalism rather than distributed to owners or shareholders. It is considered a newspaper of record in the UK. The editor-in-chief Katharine Viner succeeded Alan Rusbridger in 2015. S ...

Computer Scientist

A computer scientist is a scientist who specializes in the academic study of computer science. Computer scientists typically work on the theoretical side of computation. Although computer scientists can also focus their work and research on specific areas (such as algorithm and data structure development and design, software engineering, information theory, database theory, theoretical computer science, numerical analysis, programming language theory, compilers, computer graphics, computer vision, robotics, computer architecture, and operating systems), their foundation is the theoretical study of computing from which these other fields derive. A primary goal of computer scientists is to develop or validate models, often mathematical, to describe the properties of computational systems (processors, programs, computers interacting with people, computers interacting with other computers, etc.) with an overall objective of discovering designs that yield useful ...

AI Alignment

In the field of artificial intelligence (AI), alignment aims to steer AI systems toward a person's or group's intended goals, preferences, or ethical principles. An AI system is considered ''aligned'' if it advances the intended objectives. A ''misaligned'' AI system pursues unintended objectives. It is often challenging for AI designers to align an AI system because it is difficult for them to specify the full range of desired and undesired behaviors. Therefore, AI designers often use simpler ''proxy goals'', such as gaining human approval. But proxy goals can overlook necessary constraints or reward the AI system for merely ''appearing'' aligned. AI systems may also find loopholes that allow them to accomplish their proxy goals efficiently but in unintended, sometimes harmful, ways (reward hacking). Advanced AI systems may develop unwanted instrumental strategies, such as seeking power or survival because s ...

AI Capability Control

In the field of artificial intelligence (AI) design, AI capability control proposals, also referred to as AI confinement, aim to increase our ability to monitor and control the behavior of AI systems, including proposed artificial general intelligences (AGIs), in order to reduce the danger they might pose if misaligned. However, capability control becomes less effective as agents become more intelligent and their ability to exploit flaws in human control systems increases, potentially resulting in an existential risk from AGI. Therefore, the Oxford philosopher Nick Bostrom and others recommend capability control methods only as a supplement to alignment methods.

Motivation: Some hypothetical intelligence technologies, like "seed AI", are postulated to be able to make themselves faster and more intelligent by modifying their source code. These improvements would make further improvements possible, which would in turn make further iterative improvements possible, and so on, leadi ...

Regulation Of Artificial Intelligence

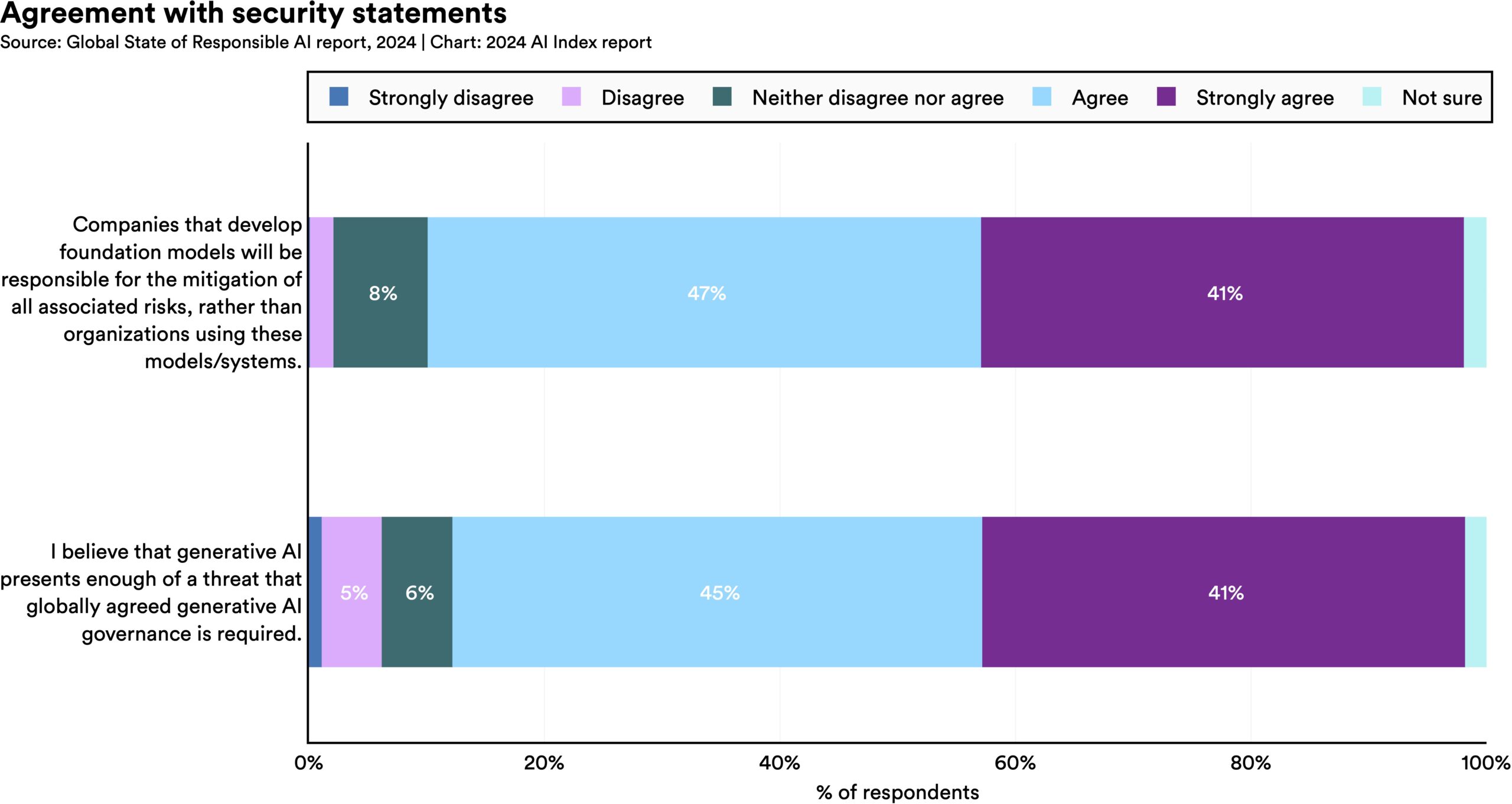

Regulation of artificial intelligence is the development of public sector policies and laws for promoting and regulating artificial intelligence (AI). It is part of the broader regulation of algorithms. The regulatory and policy landscape for AI is an emerging issue in jurisdictions worldwide, including for international organizations without direct enforcement power like the IEEE or the OECD. Since 2016, numerous AI ethics guidelines have been published in order to maintain social control over the technology. Regulation is deemed necessary to both foster AI innovation and manage associated risks. Furthermore, organizations deploying AI have a central role to play in creating and implementing trustworthy AI, adhering to established principles, and taking accountability for mitigating risks. Regulating AI through mechanisms such as review boards can also be seen as social means to approach the AI control problem.

Background: According to Stanford University's 2025 AI Index, ...