
Computational Toxicology

Computational toxicology is a multidisciplinary field of study employed in the early stages of drug discovery and development to predict the safety and potential toxicity of drug candidates. It integrates ''in silico'' (computer-based) methods with ''in vivo'' (animal) and ''in vitro'' (cell-based) approaches to achieve a more efficient, reliable, and ethically responsible toxicity evaluation process. Key aspects of computational toxicology include early safety prediction, mechanism-oriented modeling, integration with experimental approaches, and structure-based algorithms. Sean Ekins is a forerunner in the field of computational toxicology, among other fields (Ekins, Sean, ed. *Computational Toxicology: Risk Assessment for Chemicals*. Wiley Series on Technologies for the Pharmaceutical Industry. 1st ed., Wiley, February 13, 2018. ISBN 978-1119282563). Historical development: The origins of computational toxicology trace back t ...

Drug Discovery

In the fields of medicine, biotechnology, and pharmacology, drug discovery is the process by which new candidate medications are discovered. Historically, drugs were discovered by identifying the active ingredient from traditional remedies or by serendipitous discovery, as with penicillin. More recently, chemical libraries of synthetic small molecules, natural products, or extracts were screened in intact cells or whole organisms to identify substances that had a desirable therapeutic effect, in a process known as classical pharmacology. After sequencing of the human genome allowed rapid cloning and synthesis of large quantities of purified proteins, it became common practice to use high-throughput screening of large compound libraries against isolated biological targets that are hypothesized to be disease-modifying, in a process known as reverse pharmacology. Hits from these screens are then tested in cells and then in animals for efficacy. Modern drug discovery i ...

Bioinformatics

Bioinformatics is an interdisciplinary field of science that develops methods and software tools for understanding biological data, especially when the data sets are large and complex. Bioinformatics uses biology, chemistry, physics, computer science, data science, computer programming, information engineering, mathematics and statistics to analyze and interpret biological data. The process of analyzing and interpreting data is sometimes referred to as computational biology; however, the distinction between the two terms is often disputed. To some, the term ''computational biology'' refers to building and using models of biological systems. Computational, statistical, and computer programming techniques have been used for computer simulation analyses of biological queries. They include reused specific analysis "pipelines", particularly in the field of genomics, such as by the identification of genes and single nucleotide polymorphis ...

Ethics

Ethics is the philosophical study of moral phenomena. Also called moral philosophy, it investigates normative questions about what people ought to do or which behavior is morally right. Its main branches include normative ethics, applied ethics, and metaethics. Normative ethics aims to find general principles that govern how people should act. Applied ethics examines concrete ethical problems in real-life situations, such as abortion, treatment of animals, and business practices. Metaethics explores the underlying assumptions and concepts of ethics. It asks whether there are objective moral facts, how moral knowledge is possible, and how moral judgments motivate people. Influential normative theories are consequentialism, deontology, and virtue ethics. According to consequentialists, an act is right if it leads to the best consequences. Deontologists focus on acts themselves, saying that they must adhere to duties, like t ...

Environmental Protection

Environmental protection, or environment protection, refers to taking measures to protect the natural environment, prevent pollution, and maintain ecological balance. Action may be taken by individuals, advocacy groups and governments. Objectives include the conservation of the existing natural environment and natural resources and, when possible, repair of damage and reversal of harmful trends. Due to the pressures of overconsumption, population growth and technology, the biophysical environment is being degraded, sometimes permanently. This has been recognized, and governments have begun placing restraints on activities that cause environmental degradation. Since the 1960s, environmental movements have created more awareness of the multiple environmental problems. There is disagreement on the extent of the environmental impact of human activity, so protection measures are occasionally debated. Approaches: Voluntary agreements: In industrial countries, voluntary ...

Big Data

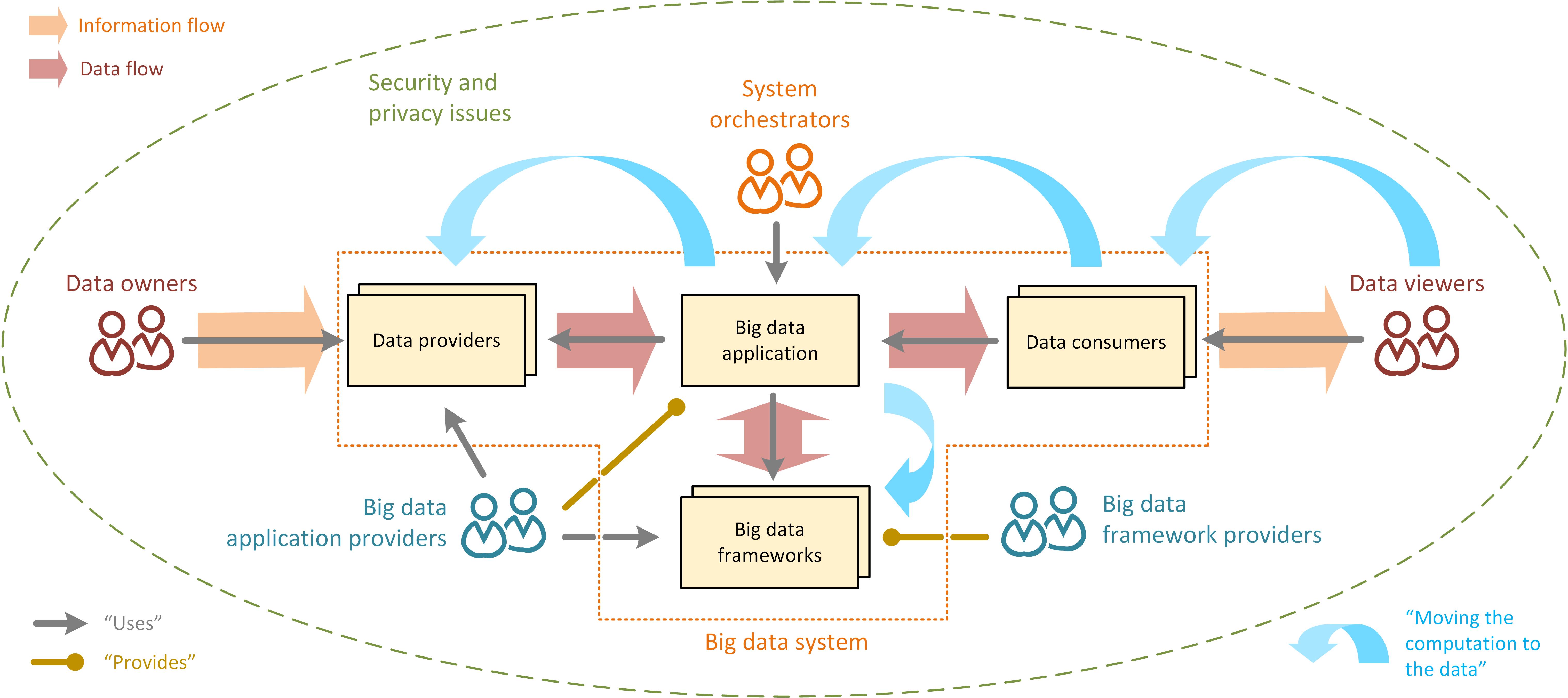

Big data primarily refers to data sets that are too large or complex to be dealt with by traditional data-processing application software. Data with many entries (rows) offer greater statistical power, while data with higher complexity (more attributes or columns) may lead to a higher false discovery rate. Big data analysis challenges include capturing data, data storage, data analysis, search, sharing, transfer, visualization, querying, updating, information privacy, and data source. Big data was originally associated with three key concepts: ''volume'', ''variety'', and ''velocity''. The analysis of big data presents challenges in sampling, which previously allowed only for observations and sampling. Thus a fourth concept, ''veracity'', refers to the quality or insightfulness of the data. Without sufficient investm ...

Artificial Intelligence

Artificial intelligence (AI) is the capability of computational systems to perform tasks typically associated with human intelligence, such as learning, reasoning, problem-solving, perception, and decision-making. It is a field of research in computer science that develops and studies methods and software that enable machines to perceive their environment and use learning and intelligence to take actions that maximize their chances of achieving defined goals. High-profile applications of AI include advanced web search engines (e.g., Google Search); recommendation systems (used by YouTube, Amazon, and Netflix); virtual assistants (e.g., Google Assistant, Siri, and Alexa); autonomous vehicles (e.g., Waymo); generative and creative tools (e.g., ChatGPT and AI art); and superhuman play and analysis in strategy games (e.g., ...

Machine Learning

Machine learning (ML) is a field of study in artificial intelligence concerned with the development and study of statistical algorithms that can learn from data and generalise to unseen data, and thus perform tasks without explicit instructions. Within a subdiscipline in machine learning, advances in the field of deep learning have allowed neural networks, a class of statistical algorithms, to surpass many previous machine learning approaches in performance. ML finds application in many fields, including natural language processing, computer vision, speech recognition, email filtering, agriculture, and medicine. The application of ML to business problems is known as predictive analytics. Statistics and mathematical optimisation (mathematical programming) methods comprise the foundations of machine learning. Data mining is a related field of study, focusing on exploratory data analysi ...

Animal Testing

Animal testing, also known as animal experimentation, animal research, and ''in vivo'' testing, is the use of animals, as model organisms, in experiments that seek answers to scientific and medical questions. This approach can be contrasted with field studies in which animals are observed in their natural environments or habitats. Experimental research with animals is usually conducted in universities, medical schools, pharmaceutical companies, defense establishments, and commercial facilities that provide animal-testing services to the industry. The focus of animal testing varies on a continuum from pure research, focusing on developing fundamental knowledge of an organism, to applied research, which may focus on answering some questions of great practical importance, such as finding a cure for a disease. Examples of applied research include testing disease treatments, breeding, defense research, and toxicology, including Testing cosmetics ...

United States Environmental Protection Agency

The Environmental Protection Agency (EPA) is an independent agency of the United States government tasked with environmental protection matters. President Richard Nixon proposed the establishment of EPA on July 9, 1970; it began operation on December 2, 1970, after Nixon signed an executive order. The order establishing the EPA was ratified by committee hearings in the House and Senate. The agency is led by its administrator, who is appointed by the president and approved by the Senate. The current administrator is Lee Zeldin. The EPA is not a Cabinet department, but the administrator is normally given cabinet rank. The EPA has its headquarters in Washington, D.C. There are regional offices for each of the agency's ten regions, as well as 27 laboratories around the country. The agency conducts environmental assessment, research, and education. It has the responsibility of maintaining and enforcing national standards under a variety of environmental laws, in consultat ...

High-throughput Screening

High-throughput screening (HTS) is a method for scientific discovery especially used in drug discovery and relevant to the fields of biology, materials science and chemistry. Using robotics, data processing/control software, liquid handling devices, and sensitive detectors, high-throughput screening allows a researcher to quickly conduct millions of chemical, genetic, or pharmacological tests. Through this process one can quickly recognize active compounds, antibodies, or genes that modulate a particular biomolecular pathway. The results of these experiments provide starting points for drug design and for understanding the noninteraction or role of a particular location. Assay plate preparation: The key labware or testing vessel of HTS is the microtiter plate, which is a small container, usually disposable and made of plastic, that features a grid of small, open divots called ''wells''. In general, microplates for HTS have either 96, 192, 384, 1536, 3456 or 6144 wells. These are a ...

Cheminformatics

Cheminformatics (also known as chemoinformatics) refers to the use of physical chemistry theory with computer and information science techniques (so-called "''in silico''" techniques) in application to a range of descriptive and prescriptive problems in the field of chemistry, including in its applications to biology and related molecular fields. Such ''in silico'' techniques are used, for example, by pharmaceutical companies and in academic settings to aid and inform the process of drug discovery, for instance in the design of well-defined combinatorial libraries of synthetic compounds, or to assist in structure-based drug design. The methods can also be used in chemical and allied industries, and in such fields as environmental science and pharmacology, where chemical processes are involved or studied. History: Cheminformatics has been an active field in various guises since the 1970s and earlier, with activity in academic departments and commercial pharmaceutical rese ...