
BCJR Algorithm

The BCJR algorithm is an algorithm for maximum a posteriori decoding of error correcting codes defined on trellises (principally convolutional codes). The algorithm is named after its inventors: Bahl, Cocke, Jelinek and Raviv (L. Bahl, J. Cocke, F. Jelinek, and J. Raviv, "Optimal Decoding of Linear Codes for minimizing symbol error rate", IEEE Transactions on Information Theory, vol. IT-20(2), pp. 284-287, March 1974). This algorithm is critical to modern iteratively-decoded error-correcting codes, including turbo codes and low-density parity-check codes.

Steps involved, based on the trellis:
* Compute forward probabilities \alpha
* Compute backward probabilities \beta
* Compute smoothed probabilities based on other information (e.g. noise variance for AWGN, bit crossover probability for binary symmetric channel)

Variations:
* SBGT BCJR: Berrou, Glavieux and Thitimajshima simplification.
* Log-Map BCJR: P. Robertson, P. Hoeher and E. Villebrun, "Optimal and Sub-Optimal Maximum A Poster ...
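The forward, backward and smoothing steps listed above can be sketched in a few lines of Python. This is only an illustrative sketch, not the formulation from the 1974 paper: the 2-state rate-1/2 trellis, the names `NEXT_STATE`, `OUTPUT`, `encode` and `bcjr_bsc`, the uniform bit prior and the unterminated trellis are all assumptions made here, and the channel is taken to be a binary symmetric channel with crossover probability `p`.

```python
# Toy trellis (an assumption for illustration): 2 states, state = previous input bit.
# From state s with input u the encoder emits (u, u XOR s) and moves to state u.
NEXT_STATE = [[0, 1], [0, 1]]                   # NEXT_STATE[s][u]
OUTPUT = [[(0, 0), (1, 1)], [(0, 1), (1, 0)]]   # OUTPUT[s][u]


def encode(bits):
    """Encode a bit list with the toy trellis, starting in state 0."""
    state, out = 0, []
    for u in bits:
        out.append(OUTPUT[state][u])
        state = NEXT_STATE[state][u]
    return out


def bcjr_bsc(received, p, prior_one=0.5):
    """Return P(u_t = 1 | received) for every step t, for a BSC with 0 < p < 1."""
    n_states, T = len(NEXT_STATE), len(received)

    def gamma(t, s, u):
        # Branch metric: bit prior times BSC likelihood of the received pair.
        lik = prior_one if u else 1.0 - prior_one
        for r, c in zip(received[t], OUTPUT[s][u]):
            lik *= p if r != c else 1.0 - p
        return lik

    def normalized(v):
        total = sum(v)
        return [x / total for x in v]

    # Forward probabilities alpha; the encoder is assumed to start in state 0.
    alpha = [[0.0] * n_states for _ in range(T + 1)]
    alpha[0][0] = 1.0
    for t in range(T):
        for s in range(n_states):
            for u in (0, 1):
                alpha[t + 1][NEXT_STATE[s][u]] += alpha[t][s] * gamma(t, s, u)
        alpha[t + 1] = normalized(alpha[t + 1])

    # Backward probabilities beta; uniform at the end (unterminated trellis).
    beta = [[1.0 / n_states] * n_states for _ in range(T + 1)]
    for t in range(T - 1, -1, -1):
        beta[t] = normalized([
            sum(gamma(t, s, u) * beta[t + 1][NEXT_STATE[s][u]] for u in (0, 1))
            for s in range(n_states)
        ])

    # Smoothed (a posteriori) probability of each input bit.
    posteriors = []
    for t in range(T):
        w = [0.0, 0.0]
        for s in range(n_states):
            for u in (0, 1):
                w[u] += alpha[t][s] * gamma(t, s, u) * beta[t + 1][NEXT_STATE[s][u]]
        posteriors.append(w[1] / (w[0] + w[1]))
    return posteriors


message = [1, 0, 1, 1, 0, 0]
noisy = encode(message)
noisy[2] = (0, 1)  # flip the first bit of the third received pair
decisions = [int(q > 0.5) for q in bcjr_bsc(noisy, p=0.1)]
print(decisions)
```

Normalizing \alpha and \beta at every step only rescales them, so the posterior ratios are unchanged while underflow on long blocks is avoided. A log-domain variant would replace the products with sums of logarithms.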

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can perform automated deductions (referred to as automated reasoning) and use mathematical and logical tests to divert the code execution through various routes (referred to as automated decision-making). Using human characteristics as descriptors of machines in metaphorical ways was already practiced by Alan Turing with terms such as "memory", "search" and "stimulus". In contrast, a heuristic is an approach to problem solving that may not be fully specified or may not guarantee correct or optimal results, especially in problem domains where there is no well-defined correct or optimal result. As an effective method, an algorithm can be expressed within a finite amount of space ...

Maximum A Posteriori

In Bayesian statistics, a maximum a posteriori probability (MAP) estimate is an estimate of an unknown quantity that equals the mode of the posterior distribution. The MAP can be used to obtain a point estimate of an unobserved quantity on the basis of empirical data. It is closely related to the method of maximum likelihood (ML) estimation, but employs an augmented optimization objective which incorporates a prior distribution (that quantifies the additional information available through prior knowledge of a related event) over the quantity one wants to estimate. MAP estimation can therefore be seen as a regularization of maximum likelihood estimation.

Description: Assume that we want to estimate an unobserved population parameter \theta on the basis of observations x. Let f be the sampling distribution of x, so that f(x \mid \theta) is the probability of x when the underlying population parameter is \theta. Then the function \theta \mapsto f(x \mid \theta) is known as ...
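To make the contrast between the ML and MAP estimates concrete, here is a small sketch for one specific case chosen for illustration (it is not taken from the excerpt): x is the number of successes in a fixed number of Bernoulli trials and the prior on \theta is Beta(a, b). The function names and the default prior parameters are assumptions. In this case the posterior is Beta(a + k, b + n - k), whose mode has a closed form.

```python
def ml_estimate(successes, trials):
    """Maximizer of the likelihood f(x | theta) for Bernoulli trials."""
    return successes / trials


def map_estimate(successes, trials, a=2.0, b=2.0):
    """Mode of the Beta(a + k, b + n - k) posterior under a Beta(a, b) prior.

    Valid when a + k > 1 and b + n - k > 1, so that the mode is interior.
    """
    return (successes + a - 1.0) / (trials + a + b - 2.0)


print(ml_estimate(7, 10))             # likelihood only
print(map_estimate(7, 10))            # pulled toward the prior mode 0.5
print(map_estimate(7, 10, 1.0, 1.0))  # uniform prior: coincides with ML
```

With a uniform prior (a = b = 1) the two estimates coincide, which is one way to see MAP as a regularized form of maximum likelihood.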

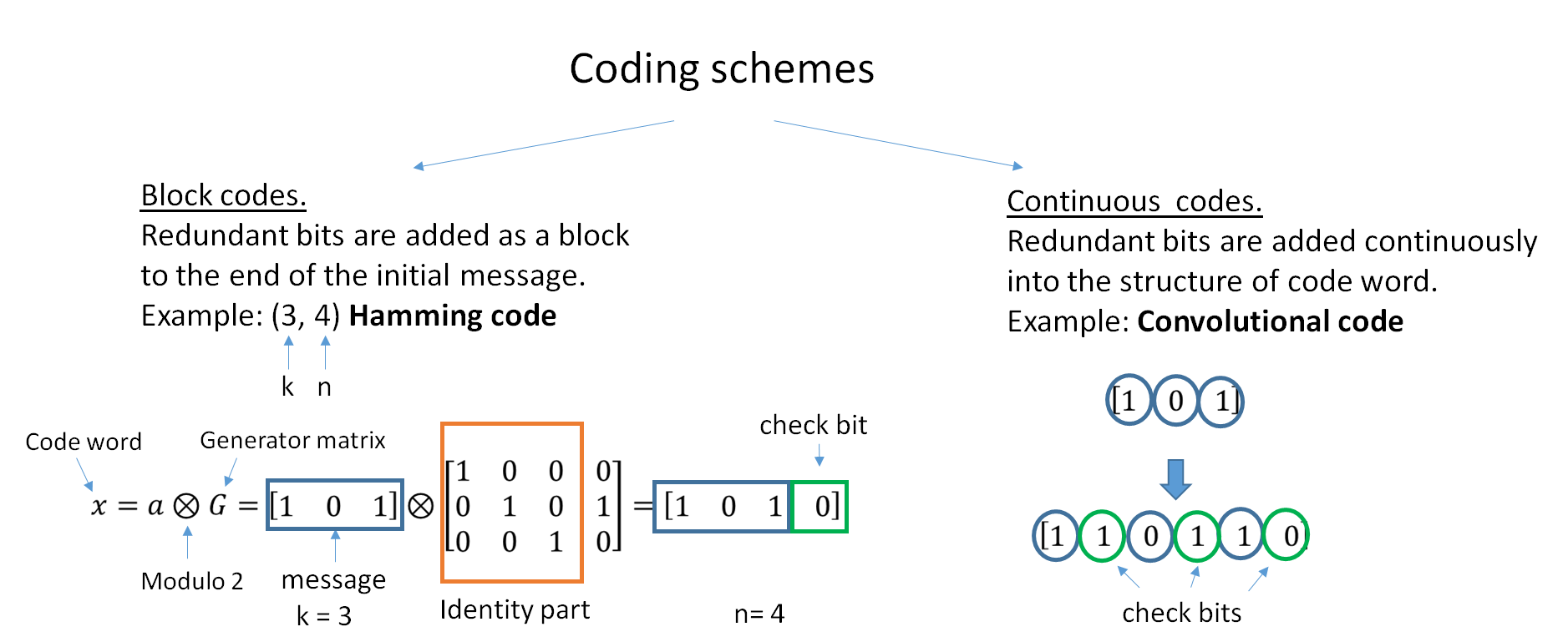

Error Correcting Code

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a hig ...

Convolutional Code

In telecommunication, a convolutional code is a type of error-correcting code that generates parity symbols via the sliding application of a boolean polynomial function to a data stream. The sliding application represents the 'convolution' of the encoder over the data, which gives rise to the term 'convolutional coding'. The sliding nature of the convolutional codes facilitates trellis decoding using a time-invariant trellis. Time-invariant trellis decoding allows convolutional codes to be maximum-likelihood soft-decision decoded with reasonable complexity. The ability to perform economical maximum-likelihood soft-decision decoding is one of the major benefits of convolutional codes. This is in contrast to classic block codes, which are generally represented by a time-variant trellis and therefore are typically hard-decision decoded. Convolutional codes are often characterized by the base code rate and the depth (or memory) of the encoder [n, k, K]. The base code rate is ...
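The "sliding application of a boolean polynomial function" can be illustrated with a short feedforward encoder. This is a minimal sketch under assumptions made here: the function name `conv_encode` is hypothetical, the code is rate 1/2 with constraint length 3, and the default generator taps are the commonly used (7, 5) octal pair rather than anything specified in the excerpt.

```python
def conv_encode(bits, generators=((1, 1, 1), (1, 0, 1))):
    """Rate-1/n feedforward convolutional encoder.

    Each generator is a tuple of taps over the window
    [current bit, previous bit, bit before that, ...].
    """
    window = [0] * len(generators[0])  # shift register, initially all zero
    out = []
    for u in bits:
        window = [u] + window[:-1]      # slide the window by one input bit
        for taps in generators:
            parity = 0
            for tap, b in zip(taps, window):
                parity ^= tap & b       # XOR of the tapped window positions
            out.append(parity)
    return out


print(conv_encode([1, 0, 1, 1]))  # [1, 1, 1, 0, 0, 0, 0, 1]
```

Because every output bit is an XOR of input bits, the encoder is linear: encoding the XOR of two messages gives the XOR of their codewords. The same taps are applied at every step, which is what makes the trellis time-invariant.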

Frederick Jelinek

Frederick Jelinek (18 November 1932 – 14 September 2010) was a Czech-American researcher in information theory, automatic speech recognition, and natural language processing. He is well known for his oft-quoted statement, "Every time I fire a linguist, the performance of the speech recognizer goes up". Jelinek was born in Czechoslovakia before World War II and emigrated with his family to the United States in the early years of the communist regime. He studied engineering at the Massachusetts Institute of Technology and taught for 10 years at Cornell University before accepting a job at IBM Research. In 1961, he married Czech screenwriter Milena Jelinek. At IBM, his team advanced approaches to computer speech recognition and machine translation. After IBM, he went to head the Center for Language and Speech Processing at Johns Hopkins University for 17 years, where he was still working on the day he died.

Personal life: Jelinek was born on November 18, 1932, as Bedřich ...

Turbo Code

In information theory, turbo codes (originally in French Turbocodes) are a class of high-performance forward error correction (FEC) codes developed around 1990–91, but first published in 1993. They were the first practical codes to closely approach the maximum channel capacity or Shannon limit, a theoretical maximum for the code rate at which reliable communication is still possible given a specific noise level. Turbo codes are used in 3G/4G mobile communications (e.g., in UMTS and LTE) and in (deep space) satellite communications as well as other applications where designers seek to achieve reliable information transfer over bandwidth- or latency-constrained communication links in the presence of data-corrupting noise. Turbo codes compete with low-density parity-check (LDPC) codes, which provide similar performance. The name "turbo code" arose from the feedback loop used during normal turbo code decoding, which was analogized to the exhaust feedback used for engine ...

Low-density Parity-check Code

In information theory, a low-density parity-check (LDPC) code is a linear error correcting code, a method of transmitting a message over a noisy transmission channel. An LDPC code is constructed using a sparse Tanner graph (a subclass of the bipartite graph). LDPC codes are capacity-approaching codes, which means that practical constructions exist that allow the noise threshold to be set very close to the theoretical maximum (the Shannon limit) for a symmetric memoryless channel. The noise threshold defines an upper bound for the channel noise, up to which the probability of lost information can be made as small as desired. Using iterative belief propagation techniques, LDPC codes can be decoded in time linear in their block length. LDPC codes are finding increasing use in applications requiring reliable and highly efficient information transfer over bandwidth-constrained or return-channel-constrained links in the presence of corrupting noise. Implementation of LDPC codes has l ...

Noise Variance

Noise is unwanted sound considered unpleasant, loud or disruptive to hearing. From a physics standpoint, there is no distinction between noise and desired sound, as both are vibrations through a medium, such as air or water. The difference arises when the brain receives and perceives a sound. Acoustic noise is any sound in the acoustic domain, either deliberate (e.g., music or speech) or unintended. In contrast, noise in electronics may not be audible to the human ear and may require instruments for detection. In audio engineering, noise can refer to the unwanted residual electronic noise signal that gives rise to acoustic noise heard as a hiss. This signal noise is commonly measured using A-weighting or ITU-R 468 weighting. In experimental sciences, noise can refer to any random fluctuations of data that hinder perception of a signal.

Measurement: Sound is measured based on the amplitude and frequency ...

AWGN

Additive white Gaussian noise (AWGN) is a basic noise model used in information theory to mimic the effect of many random processes that occur in nature. The modifiers denote specific characteristics:
* Additive because it is added to any noise that might be intrinsic to the information system.
* White refers to the idea that it has uniform power across the frequency band for the information system. It is an analogy to the color white which has uniform emissions at all frequencies in the visible spectrum.
* Gaussian because it has a normal distribution in the time domain with an average time domain value of zero.

Wideband noise comes from many natural noise sources, such as the thermal vibrations of atoms in conductors (referred to as thermal noise or Johnson–Nyquist noise), shot noise, black-body radiation from the earth and other warm objects, and from celestial sources such as the Sun. The central limit theorem of probability theory indicates that the summation ...
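As a rough illustration of the three modifiers in a discrete-time setting, the sketch below adds independent zero-mean Gaussian samples to a signal. The function name `awgn`, the parameter `sigma` (the noise standard deviation) and the choice of a constant test signal are assumptions made here. Independence between samples is what stands in for "white" in this simplified model.

```python
import random


def awgn(signal, sigma, rng=None):
    """Return signal plus independent N(0, sigma^2) noise on every sample."""
    rng = random.Random() if rng is None else rng
    return [x + rng.gauss(0.0, sigma) for x in signal]


rng = random.Random(0)               # fixed seed so the run is repeatable
sent = [1.0] * 20000                 # constant test signal
received = awgn(sent, sigma=0.5, rng=rng)

noise = [r - s for r, s in zip(received, sent)]
mean = sum(noise) / len(noise)
variance = sum((n - mean) ** 2 for n in noise) / len(noise)
print(mean, variance)                # close to 0 and to sigma**2 = 0.25
```

The sample variance of the noise estimates the noise variance that a soft-decision decoder would use when computing branch likelihoods for this channel.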

Bit Crossover Probability

The bit is the most basic unit of information in computing and digital communications. The name is a portmanteau of binary digit. The bit represents a logical state with one of two possible values. These values are most commonly represented as either "1" or "0", but other representations such as true/false, yes/no, on/off, or +/− are also commonly used. The relation between these values and the physical states of the underlying storage or device is a matter of convention, and different assignments may be used even within the same device or program. It may be physically implemented with a two-state device. The symbol for the binary digit is either "bit" per recommendation by the IEC 80000-13:2008 standard, or the lowercase character "b", as recommended by the IEEE 1541-2002 standard. A contiguous group of binary digits is commonly called a bit string, a bit vector, or a single-dimensional (or multi-dimensional) bit array. A group of eight bit ...

Binary Symmetric Channel

A binary symmetric channel (or BSC_p) is a common communications channel model used in coding theory and information theory. In this model, a transmitter wishes to send a bit (a zero or a one), and the receiver will receive a bit. The bit will be "flipped" with a "crossover probability" of p, and otherwise is received correctly. This model can be applied to varied communication channels such as telephone lines or disk drive storage. The noisy-channel coding theorem applies to BSC_p, saying that information can be transmitted at any rate up to the channel capacity with arbitrarily low error. The channel capacity is 1 - \operatorname{H}_\text{b}(p) bits, where \operatorname{H}_\text{b} is the binary entropy function. Codes including Forney's code have been designed to transmit information efficiently across the channel.

Definition: A binary symmetric channel with crossover probability p, denoted by BSC_p, is a channel with binary input and binary output and probability of error p. That i ...
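The capacity formula and the flipping behaviour can be checked numerically with a short sketch. The function names `binary_entropy`, `bsc_capacity` and `bsc_transmit` are hypothetical names chosen here; the entropy is measured in bits (base-2 logarithm), matching the capacity expression above.

```python
import math
import random


def binary_entropy(p):
    """Binary entropy H_b(p) in bits, with H_b(0) = H_b(1) = 0."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1.0 - p) * math.log2(1.0 - p)


def bsc_capacity(p):
    """Capacity 1 - H_b(p) of a BSC with crossover probability p."""
    return 1.0 - binary_entropy(p)


def bsc_transmit(bits, p, rng=None):
    """Flip each bit independently with probability p."""
    rng = random.Random() if rng is None else rng
    return [b ^ (rng.random() < p) for b in bits]


for p in (0.0, 0.11, 0.5):
    print(p, bsc_capacity(p))
print(bsc_transmit([0, 1, 1, 0, 1], p=0.1, rng=random.Random(0)))
```

The capacity is 1 bit per use for a noiseless channel (p = 0), falls to 0 at p = 0.5 where the output is independent of the input, and is symmetric about p = 0.5 because a channel that flips most bits is as informative as one that flips few.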

Forward Error Correction

In computing, telecommunication, information theory, and coding theory, an error correction code, sometimes error correcting code, (ECC) is used for controlling errors in data over unreliable or noisy communication channels. The central idea is the sender encodes the message with redundant information in the form of an ECC. The redundancy allows the receiver to detect a limited number of errors that may occur anywhere in the message, and often to correct these errors without retransmission. The American mathematician Richard Hamming pioneered this field in the 1940s and invented the first error-correcting code in 1950: the Hamming (7,4) code. ECC contrasts with error detection in that errors that are encountered can be corrected, not simply detected. The advantage is that a system using ECC does not require a reverse channel to request retransmission of data when an error occurs. The downside is that there is a fixed overhead that is added to the message, thereby requiring a ...