
Blahut–Arimoto Algorithm

The term Blahut–Arimoto algorithm is often used to refer to a class of algorithms for numerically computing either the information-theoretic capacity of a channel, the rate-distortion function of a source, or a source encoding (i.e. compression to remove the redundancy). They are iterative algorithms that eventually converge to one of the optima of the optimization problem associated with these information-theoretic concepts. History and application: For the case of channel capacity, the algorithm was independently invented by Suguru Arimoto and Richard Blahut. In addition, Blahut's treatment gives algorithms for computing rate distortion and generalized capacity with input constraints (i.e. the capacity-cost function, analogous to rate-distortion). These algorithms are most applicable to the case of arbitrary finite-alphabet sources. Much work has been done to extend them to more general problem instances. Recently, a version of the algorithm that accounts for continuo ...
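To make the iterative idea concrete, here is a minimal sketch of the channel-capacity variant for a discrete memoryless channel, written in plain Python. The function name `blahut_arimoto_capacity`, the row-stochastic matrix `W`, and the parameters `tol` and `max_iter` are illustrative assumptions rather than anything defined in the summary above. The stopping rule uses the standard lower and upper bounds on capacity.

```python
import math

def blahut_arimoto_capacity(W, tol=1e-9, max_iter=10_000):
    """Estimate the capacity (in bits) of a discrete memoryless channel.

    W[x][y] is the probability of output y given input x (each row sums to 1).
    Returns (capacity_estimate, input_distribution). Illustrative sketch only.
    """
    n_in, n_out = len(W), len(W[0])
    p = [1.0 / n_in] * n_in  # start from the uniform input distribution
    lower = 0.0
    for _ in range(max_iter):
        # Output distribution induced by the current input distribution.
        q = [sum(p[x] * W[x][y] for x in range(n_in)) for y in range(n_out)]
        # d[x] = KL divergence D(W[x] || q) in nats; 0 * log 0 is taken as 0.
        d = [
            sum(W[x][y] * math.log(W[x][y] / q[y])
                for y in range(n_out) if W[x][y] > 0)
            for x in range(n_in)
        ]
        # Bounds on capacity in nats: log(sum_x p[x] e^{d[x]}) <= C <= max_x d[x].
        z = sum(p[x] * math.exp(d[x]) for x in range(n_in))
        lower, upper = math.log(z), max(d)
        # Multiplicative update of the input distribution.
        p = [p[x] * math.exp(d[x]) / z for x in range(n_in)]
        if upper - lower < tol:
            break
    return lower / math.log(2), p

# Binary symmetric channel with crossover probability 0.1.
capacity, p_opt = blahut_arimoto_capacity([[0.9, 0.1], [0.1, 0.9]])
```

Each pass alternates between computing the output distribution for the current input distribution and reweighting the inputs, which is why the method is often described as an alternating optimization.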

Algorithm

In mathematics and computer science, an algorithm is a finite sequence of mathematically rigorous instructions, typically used to solve a class of specific problems or to perform a computation. Algorithms are used as specifications for performing calculations and data processing. More advanced algorithms can use conditionals to divert the code execution through various routes (referred to as automated decision-making) and deduce valid inferences (referred to as automated reasoning). In contrast, a heuristic is an approach to solving problems without well-defined correct or optimal results (David A. Grossman, Ophir Frieder, ''Information Retrieval: Algorithms and Heuristics'', 2nd edition, 2004). For example, although social media recommender systems are commonly called "algorithms", they actually rely on heuristics as there is no truly "correct" recommendation. As an e ...

Richard Blahut

Richard Edward Blahut was elected in 1990 as a member of the National Academy of Engineering, in Electronics, Communication & Information Systems Engineering and Computer Science & Engineering, for pioneering work in coherent emitter signal processing and for contributions to information theory and error control codes. (bo ...

Lossy Compression

In information technology, lossy compression or irreversible compression is the class of data compression methods that uses inexact approximations and partial data discarding to represent the content. These techniques are used to reduce data size for storing, handling, and transmitting content. Higher degrees of approximation create coarser images as more details are removed. This is opposed to lossless data compression (reversible data compression) which does not degrade the data. The amount of data reduction possible using lossy compression is much higher than using lossless techniques. Well-designed lossy compression technology often reduces file sizes significantly before degradation is noticed by the end-user. Even when noticeable by the user, further data reduction may be desirable (e.g., for real-time communication or to reduce transmission times or storage needs). The most widely used lossy compression algorithm is the discrete cosine transform (DCT), first published by N ...

Coordinate Descent

Coordinate descent is an optimization algorithm that successively minimizes along coordinate directions to find the minimum of a function. At each iteration, the algorithm determines a coordinate or coordinate block via a coordinate selection rule, then exactly or inexactly minimizes over the corresponding coordinate hyperplane while fixing all other coordinates or coordinate blocks. A line search along the coordinate direction can be performed at the current iterate to determine the appropriate step size. Coordinate descent is applicable in both differentiable and derivative-free contexts. Description: Coordinate descent is based on the idea that the minimization of a multivariable function F(\mathbf{x}) can be achieved by minimizing it along one direction at a time, i.e., solving univariate (or at least much simpler) optimization problems in a loop. In the simplest case of ''cyclic coordinate descent'', one cyclically iterates through the directions, one at a time, minimizing the ...
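The cyclic scheme described above can be sketched for one simple case, a convex quadratic, where each one-dimensional minimization has a closed form. The function name `cyclic_coordinate_descent`, the choice of objective, and the fixed number of sweeps are assumptions made for illustration; they are not taken from the summary.

```python
def cyclic_coordinate_descent(A, b, sweeps=100):
    """Minimize F(x) = 0.5 * x^T A x - b^T x by exact cyclic coordinate updates.

    Assumes A is symmetric positive definite, so every A[i][i] > 0.
    Illustrative sketch only.
    """
    n = len(b)
    x = [0.0] * n
    for _ in range(sweeps):
        for i in range(n):
            # Solve dF/dx_i = 0 for x_i while all other coordinates stay fixed.
            rest = sum(A[i][j] * x[j] for j in range(n) if j != i)
            x[i] = (b[i] - rest) / A[i][i]
    return x

# The minimizer of this quadratic solves A x = b.
x_min = cyclic_coordinate_descent([[4.0, 1.0], [1.0, 3.0]], [1.0, 2.0])
```

For this particular objective the update coincides with the Gauss–Seidel iteration for the linear system A x = b; general objectives would need a line search or an inexact step instead of the closed-form update.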

Conditional Probability Distribution

In probability theory and statistics, the conditional probability distribution is a probability distribution that describes the probability of an outcome given the occurrence of a particular event. Given two jointly distributed random variables X and Y, the conditional probability distribution of Y given X is the probability distribution of Y when X is known to be a particular value; in some cases the conditional probabilities may be expressed as functions containing the unspecified value x of X as a parameter. When both X and Y are categorical variables, a conditional probability table is typically used to represent the conditional probability. The conditional distribution contrasts with the marginal distribution of a random variable, which is its distribution without reference to the value of the other variable. If the conditional distribution of Y given X is a continuous distribution, then its probability density function is known as the conditional density function. The prop ...

Directed Information

Directed information is an information theory measure that quantifies the information flow from the random string X^n = (X_1,X_2,\dots,X_n) to the random string Y^n = (Y_1,Y_2,\dots,Y_n). The term ''directed information'' was coined by James Massey and is defined as I(X^n\to Y^n) \triangleq \sum_{i=1}^n I(X^i;Y_i \mid Y^{i-1}), where I(X^i;Y_i \mid Y^{i-1}) is the conditional mutual information I(X_1,X_2,\dots,X_i;Y_i \mid Y_1,Y_2,\dots,Y_{i-1}). Directed information has applications to problems where causality plays an important role such as the capacity of channels with feedback, capacity of discrete memoryless networks, capacity of networks with in-block memory, gambling with causal side information, compression with causal side information, real-time control communication settings, and statistical physics. Causal conditioning: The essence of directed information is causal conditioning. The probability of x^n causally conditioned on y^n is defined as P(x^n \| y^n) \triangleq \prod_{i=1}^n P(x_i \mid x^{i-1},y^{i}). This is s ...

PLOS Computational Biology

''PLOS Computational Biology'' is a monthly peer-reviewed open access scientific journal covering computational biology. It was established in 2005 by the Public Library of Science in association with the International Society for Computational Biology (ISCB) in the same format as the previously established ''PLOS Biology'' and ''PLOS Medicine''. The founding editor-in-chief was Philip Bourne and the current ones are Feilim Mac Gabhann and Jason Papin. Format: The journal publishes both original research and review articles. All articles are open access and licensed under the Creative Commons Attribution License. Since its inception, the journal has published the ''Ten Simple Rules'' series of practical guides, which has subsequently become one of the journal's most read article series. The ''Ten Simple Rules'' series then led to the ''Quick Tips'' collection, whose articles contain recommendations on computational practices and methods, such as dimensionality reduction for ...

Rate–distortion Theory

Rate–distortion theory is a major branch of information theory which provides the theoretical foundations for lossy data compression; it addresses the problem of determining the minimal number of bits per symbol, as measured by the rate ''R'', that should be communicated over a channel, so that the source (input signal) can be approximately reconstructed at the receiver (output signal) without exceeding an expected distortion ''D''. Introduction: Rate–distortion theory gives an analytical expression for how much compression can be achieved using lossy compression methods. Many of the existing audio, speech, image, and video compression techniques have transforms, quantization, and bit-rate allocation procedures that capitalize on the general shape of rate–distortion functions. Rate–distortion theory was created by Claude Shannon in his foundational work on information theory. In rate–distortion theory, the ''rate'' is usually understood as the number of bits per d ...

IEEE Transactions On Information Theory

''IEEE Transactions on Information Theory'' is a monthly peer-reviewed scientific journal published by the IEEE Information Theory Society. It covers information theory and the mathematics of communications. It was established in 1953 as ''IRE Transactions on Information Theory''. The editor-in-chief is Venugopal V. Veeravalli (University of Illinois Urbana-Champaign). As of 2007, the journal allows the posting of preprints on arXiv. According to Jack van Lint, it is the leading research journal in the whole field of coding theory. A 2006 study using the PageRank network analysis algorithm found that, among hundreds of computer science-related journals, ''IEEE Transactions on Information Theory'' had the highest ranking and was thus deemed the most prestigious. ''ACM Computing Surveys'', with the highest impact factor ...

Information Theory

Information theory is the mathematical study of the quantification, storage, and communication of information. The field was established and formalized by Claude Shannon in the 1940s, though early contributions were made in the 1920s through the works of Harry Nyquist and Ralph Hartley. It is at the intersection of electronic engineering, mathematics, statistics, computer science, neurobiology, physics, and electrical engineering. A key measure in information theory is entropy. Entropy quantifies the amount of uncertainty involved in the value of a random variable or the outcome of a random process. For example, identifying the outcome of a fair coin flip (which has two equally likely outcomes) provides less information (lower entropy, less uncertainty) than identifying the outcome from a roll of a die (which has six equally likely outcomes). Some other important measu ...
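The coin-versus-die comparison can be checked numerically. The short sketch below uses a hypothetical helper, `entropy_bits`, that computes Shannon entropy in bits for a finite probability distribution; the helper and variable names are assumptions for illustration.

```python
import math

def entropy_bits(probs):
    """Shannon entropy in bits; terms with zero probability contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)

coin_entropy = entropy_bits([0.5, 0.5])   # fair coin: two equally likely outcomes
die_entropy = entropy_bits([1 / 6] * 6)   # fair die: six equally likely outcomes
```

The coin comes out at 1 bit and the die at log2(6), about 2.585 bits, which matches the statement that the die roll carries more information.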

Suguru Arimoto

Suguru (written: , , or ) is a masculine Japanese given name. Notable people with the name include: *, Japanese footballer *, Japanese fencer *, Japanese baseball player and analyst *, Japanese footballer *, Japanese footballer *, Japanese footballer *, Japanese baseball player *, Japanese bobsledder *, Japanese long-distance runner Fictional characters: *, a character from the ''Haikyu!!'' series with the position of captain and outside hitter from Nohebi Academy *Suguru Geto, an antagonist from the anime and manga series ''Jujutsu Kaisen'' *Suguru Kamoshida, an antagonist in the game ''Persona 5'' *, protagonist of the manga series ''Kinnikuman'' *Suguru Niragi, a (sort of) antagonist from the manga and series ''Alice in Borderland'' ...

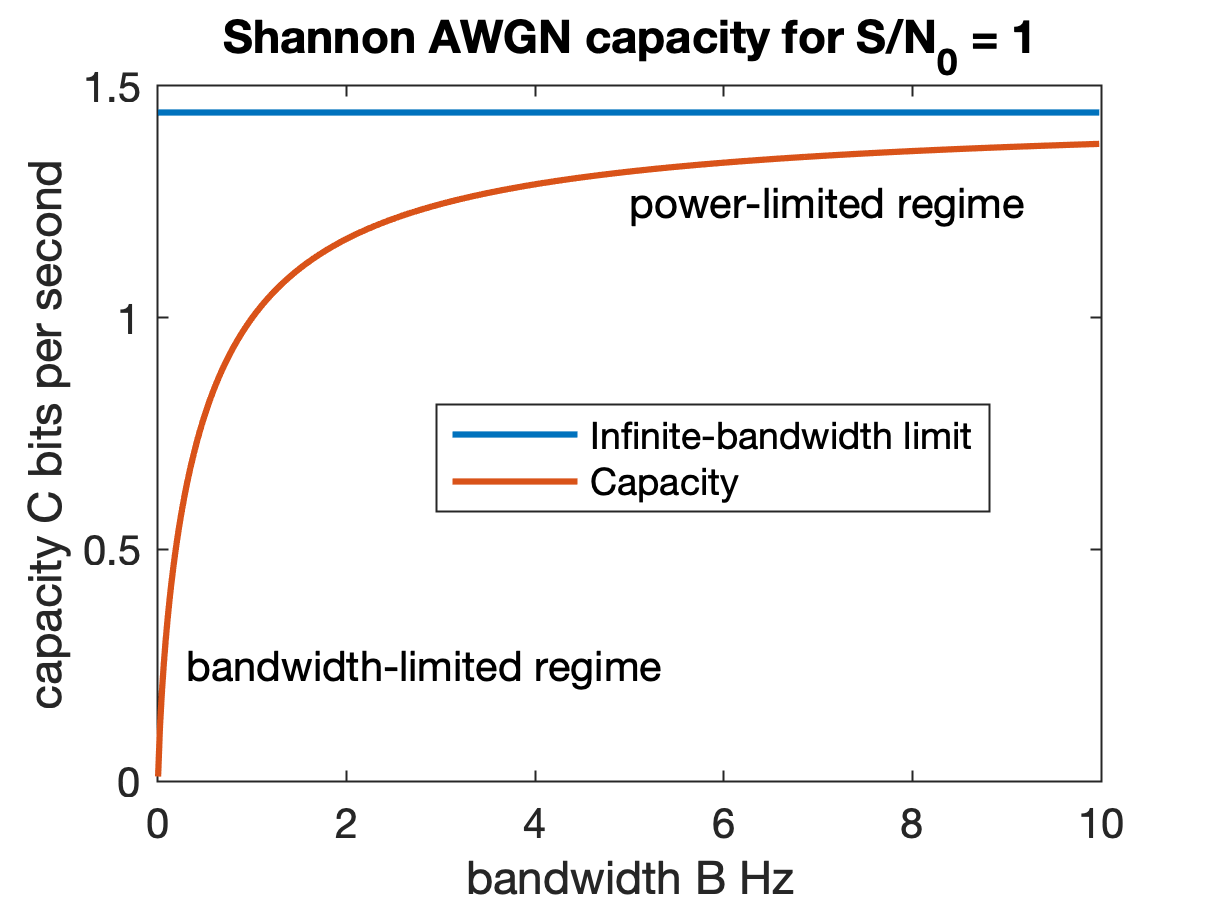

Channel Capacity

Channel capacity, in electrical engineering, computer science, and information theory, is the theoretical maximum rate at which information can be reliably transmitted over a communication channel. Following the terms of the noisy-channel coding theorem, the channel capacity of a given channel is the highest information rate (in units of information per unit time) that can be achieved with arbitrarily small error probability. Information theory, developed by Claude E. Shannon in 1948, defines the notion of channel capacity and provides a mathematical model by which it may be computed. The key result states that the capacity of the channel, as defined above, is given by the maximum of the mutual information between the input and output of the channel, where the maximization is with respect to the input distribution. The notion of channel capacity has been central to the development of modern wireline and wireless communication system ...
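As a worked illustration of the key result, the capacity formula can be written out explicitly. The symbols p_X (input distribution), H_b (binary entropy function) and \varepsilon (crossover probability) are notation assumed here, and the binary symmetric channel is chosen only as a standard example.

```latex
C = \max_{p_X} I(X;Y), \qquad
C_{\mathrm{BSC}}(\varepsilon) = 1 - H_b(\varepsilon), \qquad
H_b(\varepsilon) = -\varepsilon \log_2 \varepsilon - (1-\varepsilon)\log_2(1-\varepsilon).
```

For \varepsilon = 0.1 this gives H_b(0.1) \approx 0.469, so the capacity is roughly 0.531 bits per channel use, attained by the uniform input distribution.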